Using Docker Behind a Proxy

In this article, I’ll explore a common pain point for anyone running Docker in a large corporate environment: using Docker without direct internet access.

By default, Docker assumes that the system running Docker and executing Docker commands has direct access to the internet. In large corporate networks this is often not the case: more often than not, all internet traffic is routed through a proxy.

While this setup is generally transparent to end users, command-line tools such as Docker are often not configured to use the proxy. In this article, we will use Docker to build and run a simple container within such a “locked down” network.

A Simple Example of Working with a Proxy

In this article, we will build a simple Ubuntu-based container that uses apt-get to install curl. While this example is simple, it requires us to use the proxy in several ways.

Before jumping into the proxy settings, however, let’s take a quick look at the Dockerfile we will be building from.

```dockerfile
FROM ubuntu
RUN apt-get update && apt-get install -y curl
```

The Dockerfile above has only two instructions: FROM, which specifies the base image to build the container from, and RUN, which executes commands (in this case, apt-get commands).

Let’s try running docker build to see what happens by default in a “locked down” network.

```
$ sudo docker build -t curl .
Sending build context to Docker daemon 12.29 kB
Sending build context to Docker daemon
Step 0 : FROM ubuntu
Pulling repository ubuntu
INFO[0002] Get https://index.docker.io/v1/repositories/library/ubuntu/images: dial tcp 52.22.146.88:443: connection refused
```

From the above output, we can see that the build fails while downloading the base ubuntu image. The reason is clear from the error: the connection to index.docker.io was refused because direct internet access is blocked.

For this to work within our network, Docker needs to route its requests through the proxy, which communicates with docker.io on Docker’s behalf.

Configuring Docker to Use a Proxy

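On Ubuntu (the OS our Docker host is running), we can configure Docker to do this by editing the /etc/default/docker file. This file is read by the Upstart-managed Docker service on our host; on systemd-based installations it may be ignored, and Docker’s documentation describes setting the proxy in a systemd drop-in for the docker service instead.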

```shell
# If you need Docker to use an HTTP proxy, it can also be specified here.
#export http_proxy="http://127.0.0.1:3128/"
```

Within /etc/default/docker, there is a line, commented out by default, that sets the http_proxy environment variable.

In order to route Docker traffic through a proxy, we will need to uncomment this line and replace the default value with our proxy address.

```shell
# If you need Docker to use an HTTP proxy, it can also be specified here.
export http_proxy="http://192.168.33.10:3128/"
```

In the above, we point Docker at the proxy located at 192.168.33.10:3128. This is a simple Squid proxy running in a container created from the sameersbn/squid:3.3.8-23 Docker image.
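If you provision several Docker hosts, the same change can be scripted rather than made by hand. The sketch below is a minimal example, assuming GNU sed (as shipped with Ubuntu) and the stock commented-out line shown earlier; set_docker_proxy is a hypothetical helper name, and the proxy URL must not contain `|` or `&`, which sed would treat specially.

```shell
#!/bin/sh
# Hypothetical helper: activate the http_proxy line in a Docker defaults file
# (normally /etc/default/docker, which requires root) and point it at a proxy.
set_docker_proxy() {
  file="$1"   # path to the defaults file
  proxy="$2"  # proxy URL, e.g. http://192.168.33.10:3128/
  # Match the export line whether or not it is still commented out and
  # replace it. Assumes GNU sed (-i edits in place) and a URL without | or &.
  sed -i "s|^#\{0,1\}export http_proxy=.*|export http_proxy=\"${proxy}\"|" "$file"
}
```

Running `set_docker_proxy /etc/default/docker http://192.168.33.10:3128/` as root should leave the file with the same active line we edited in by hand; the explanatory comment line above it is not touched.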

This proxy is not currently set up for username and password authentication. If it were, we could add the credentials using the http://username:password@192.168.33.10:3128/ format.
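Characters such as `@` or `:` in a password would end the user-info part of the URL early, so they need to be percent-encoded before being placed in the proxy URL. The sketch below assumes hypothetical credentials; urlencode_userinfo is a hypothetical helper that encodes only the delimiters most likely to cause trouble (`%`, `@`, `:`, `/`), not every reserved character. Most URL parsers decode these escapes, but it is worth confirming that your proxy accepts them.

```shell
#!/bin/sh
# Hypothetical helper: percent-encode characters that would otherwise be
# read as URL delimiters inside the user:password part of a proxy URL.
urlencode_userinfo() {
  # Encode "%" first so the escapes added afterwards are not re-encoded.
  printf '%s' "$1" | sed -e 's/%/%25/g' -e 's/@/%40/g' -e 's/:/%3A/g' -e 's|/|%2F|g'
}

# Hypothetical credentials; the host and port are the proxy used in this article.
PROXY_USER="username"
PROXY_PASS="p@ss:word"
PROXY_HOST="192.168.33.10"
PROXY_PORT="3128"

# user:password@ goes between the scheme and the proxy host.
http_proxy="http://$(urlencode_userinfo "$PROXY_USER"):$(urlencode_userinfo "$PROXY_PASS")@${PROXY_HOST}:${PROXY_PORT}/"
export http_proxy
```

With these values, http_proxy becomes `http://username:p%40ss%3Aword@192.168.33.10:3128/`, which is the string that would go on the export line in /etc/default/docker.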

After changing /etc/default/docker, we need to restart the Docker service for the changes to take effect.

We can do this by executing the service command.

```
# service docker restart
docker stop/waiting
docker start/running, process 32703
```
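To confirm that the restarted daemon actually inherited the variable, you can read its environment from Linux’s /proc filesystem. This is a minimal sketch; show_proxy_env is a hypothetical helper, and reading another process’s environment normally requires root.

```shell
#!/bin/sh
# Hypothetical helper: print the proxy-related variables in the environment
# of a running process, read from Linux's /proc filesystem.
show_proxy_env() {
  pid="$1"
  # /proc/<pid>/environ holds NUL-separated NAME=value entries; turn them
  # into lines and keep only the *_proxy variables (any case).
  tr '\000' '\n' < "/proc/${pid}/environ" | grep -i '_proxy='
}
```

On newer installations the daemon process is `dockerd`, so `show_proxy_env "$(pidof dockerd)"` run as root would print the http_proxy line; older releases run the daemon as a `docker` process, so adjust the PID lookup to your installation.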

With our changes in effect, let’s see what happens when we run docker build again.

```
$ sudo docker build -t curl .
Sending build context to Docker daemon 10.75 kB
Sending build context to Docker daemon
Step 0 : FROM ubuntu
latest: Pulling from ubuntu
2a2723a6e328: Pull complete
fa1b78b7309e: Pull complete
0ce8ddcea421: Pull complete
c4cb212fd6fe: Pull complete
109d5efde28a: Pull complete
7f06d5cab2df: Pull complete
Digest: sha256:f649e49c1ed34607912626a152efbc23238678af1ac37859b6223ef28e711a3f
Status: Downloaded newer image for ubuntu:latest
 ---> 7f06d5cab2df
Step 1 : RUN apt-get update && apt-get install -y curl
 ---> Running in 39b964be90bc
Err:1 http://archive.ubuntu.com/ubuntu xenial InRelease
  Cannot initiate the connection to archive.ubuntu.com:80 (2001:67c:1360:8001::17). - connect (101: Network is unreachable) [IP: 2001:67c:1360:8001::17 80]
W: Failed to fetch http://archive.ubuntu.com/ubuntu/dists/xenial/InRelease  Cannot initiate the connection to archive.ubuntu.com:80 (2001:67c:1360:8001::17). - connect (101: Network is unreachable) [IP: 2001:67c:1360:8001::17 80]
W: Some index files failed to download. They have been ignored, or old ones used instead.
Reading package lists...
Building dependency tree...
Reading state information...
E: Unable to locate package curl
INFO[0013] The command [/bin/sh -c apt-get update && apt-get install -y curl] returned a non-zero code: 100
```

From the above build output, we can see that Docker was able to pull the ubuntu image successfully. However, our build still failed due to connectivity issues.

Specifically, our build failed during the apt-get command execution.

The build failed because, even though we configured Docker itself to use a proxy, the environment within the container is not configured to use one. When apt-get was executed within the container, it attempted to connect directly to the internet instead of routing through the proxy.

In cases like this, it is important to remember that containers sometimes need to be treated the same way we would treat any other system. The environment inside a container behaves as if it were independent of the host, so configuration that exists on the host does not necessarily exist within the container. Our proxy setting is a prime example of that in practice.

For example, if we were to stand up a physical or virtual machine running Ubuntu in this network environment, we would need to configure apt-get on that system to use the proxy, just as we configured Docker to use it.
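On such a machine, one common approach is apt’s own configuration: the `Acquire::http::Proxy` and `Acquire::https::Proxy` options described in apt.conf(5), placed in a file under /etc/apt/apt.conf.d. The sketch below writes such a file; write_apt_proxy_conf and the `95proxy` file name are hypothetical, and the directory is a parameter so the function can be tried outside /etc. Unlike environment variables, this setting affects only apt.

```shell
#!/bin/sh
# Hypothetical helper: write an apt configuration snippet that sends apt's
# HTTP and HTTPS traffic through the given proxy. On a real Ubuntu host the
# directory is /etc/apt/apt.conf.d (writing there requires root).
write_apt_proxy_conf() {
  dir="$1"     # e.g. /etc/apt/apt.conf.d
  proxy="$2"   # e.g. http://192.168.33.10:3128/
  cat > "$dir/95proxy" <<EOF
Acquire::http::Proxy "$proxy";
Acquire::https::Proxy "$proxy";
EOF
}
```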

The same is true for the container we are building. Luckily, configuring a proxy for apt-get is pretty easy.

Configuring apt-get to Use a Proxy

We simply need to set the http_proxy and https_proxy environment variables at build time. We can do this with the --build-arg flag of the docker build command itself.

```
$ sudo docker build -t curl \
    --build-arg http_proxy=http://192.168.33.10:3128 \
    --build-arg https_proxy=http://192.168.33.10:3128 .
```

Or we can set the http_proxy and https_proxy values using the ENV instruction within the Dockerfile.

```dockerfile
FROM ubuntu
ENV http_proxy "http://192.168.33.10:3128"
ENV https_proxy "http://192.168.33.10:3128"
RUN apt-get update && apt-get install -y curl
```

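The two options differ in scope: values passed with --build-arg exist only while the image is being built, whereas values set with ENV are stored in the image and remain set in every container started from it. For this article, we will specify the values within our Dockerfile.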
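If you prefer the --build-arg approach, the flags can be generated from whatever proxy variables are already set in your shell instead of typing the address twice. Docker treats http_proxy, https_proxy, and no_proxy (along with ftp_proxy and the uppercase forms) as predefined build arguments, so the Dockerfile needs no ARG instruction for them. This is a minimal sketch; proxy_build_args is a hypothetical helper, and it assumes the values contain no spaces or wildcard characters, because its output is deliberately word-split.

```shell
#!/bin/sh
# Hypothetical helper: print a --build-arg flag for each proxy variable that
# is set to a non-empty value in the current shell.
proxy_build_args() {
  args=""
  for var in http_proxy https_proxy no_proxy; do
    # Indirect lookup of the variable named by $var (empty if unset).
    eval "val=\${$var:-}"
    if [ -n "$val" ]; then
      args="$args --build-arg $var=$val"
    fi
  done
  # Drop the leading space added by the first append.
  printf '%s\n' "${args# }"
}
```

Usage: `sudo docker build -t curl $(proxy_build_args) .`. Because `$(...)` is expanded by your own shell before sudo runs, it sees your variables even though sudo normally resets the environment.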

Testing the Proxy Settings

With the http_proxy and https_proxy environment variables added, let’s rerun our build.

```
$ sudo docker build -t curl .
Sending build context to Docker daemon 10.75 kB
Sending build context to Docker daemon
Step 0 : FROM ubuntu
latest: Pulling from ubuntu
2a2723a6e328: Pull complete
fa1b78b7309e: Pull complete
0ce8ddcea421: Pull complete
c4cb212fd6fe: Pull complete
109d5efde28a: Pull complete
7f06d5cab2df: Pull complete
Digest: sha256:f649e49c1ed34607912626a152efbc23238678af1ac37859b6223ef28e711a3f
Status: Downloaded newer image for ubuntu:latest
 ---> 7f06d5cab2df
Step 1 : ENV http_proxy "http://192.168.33.10:3128"
 ---> Running in 6d66226a7cbf
 ---> 89fab90c9ca2
Removing intermediate container 6d66226a7cbf
Step 2 : ENV https_proxy "http://192.168.33.10:3128"
 ---> Running in ce80b9502a59
 ---> c490a0c49b2b
Removing intermediate container ce80b9502a59
Step 3 : RUN apt-get update && apt-get install -y curl
 ---> Running in 2eb328075f7e
Get:1 http://archive.ubuntu.com/ubuntu xenial InRelease [247 kB]
Get:2 http://archive.ubuntu.com/ubuntu xenial-updates InRelease [102 kB]
Get:3 http://archive.ubuntu.com/ubuntu xenial-security InRelease [102 kB]
Get:4 http://archive.ubuntu.com/ubuntu xenial/main Sources [1103 kB]
Get:5 http://archive.ubuntu.com/ubuntu xenial/restricted Sources [5179 B]
```

From the above, it appears our build was successful. Let’s go ahead and run this container.

```
$ sudo docker run curl curl -v http://blog.codeship.com
* Rebuilt URL to: http://blog.codeship.com/
* Trying 192.168.33.10...
> GET http://blog.codeship.com/ HTTP/1.1
> Host: blog.codeship.com
> User-Agent: curl/7.47.0
> Accept: */*
> Proxy-Connection: Keep-Alive
>
< HTTP/1.1 301 Moved Permanently
< Server: nginx
< Date: Wed, 22 Mar 2017 00:45:04 GMT
< Content-Type: text/html
< Content-Length: 178
< Location: https://blog.codeship.com/
```

The curl output shows that our request to http://blog.codeship.com was also sent through the proxy server at 192.168.33.10 (note the “Trying 192.168.33.10” line and the Proxy-Connection header). This is a result of how we specified the proxy: ENV makes http_proxy and https_proxy part of the container’s environment, and curl, like apt-get, reads those environment variables.
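The reason both variables are set is that curl chooses the variable by URL scheme: http_proxy for http:// URLs and https_proxy for https:// URLs (curl accepts http_proxy only in lowercase). If curl were told to follow the 301 redirect above to https://blog.codeship.com/, the https_proxy value would be used. The sketch below models only that selection rule; proxy_for_url is a hypothetical helper, not curl’s code, and it ignores no_proxy, ALL_PROXY, and other protocols.

```shell
#!/bin/sh
# Simplified model of how curl picks a proxy from the environment.
# proxy_for_url is a hypothetical helper, not part of curl; it ignores
# no_proxy, ALL_PROXY and non-HTTP protocols.
proxy_for_url() {
  scheme="${1%%://*}"   # text before "://", e.g. "http" or "https"
  case "$scheme" in
    http)  proxy="${http_proxy:-}" ;;
    https) proxy="${https_proxy:-}" ;;
    *)     proxy="" ;;
  esac
  # An unset or empty variable means the request goes out directly.
  if [ -n "$proxy" ]; then
    printf '%s\n' "$proxy"
  else
    printf '%s\n' "DIRECT"
  fi
}
```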

It is important to remember that ENV values affect the container not only during the build but also at run time. If for some reason we did not want the container to use this proxy at run time, we would need to override the http_proxy and https_proxy values, either by passing new values to docker run (for example, with its -e flag) or by changing our Dockerfile to match the one below.

```dockerfile
FROM ubuntu
ENV http_proxy "http://192.168.33.10:3128"
ENV https_proxy "http://192.168.33.10:3128"
RUN apt-get update && apt-get install -y curl
ENV http_proxy ""
ENV https_proxy ""
```

With the above, the http_proxy and https_proxy values are set to empty strings after apt-get has run, so when a container is launched with docker run, tools such as curl treat them as “no proxy” and route traffic without going through the proxy.
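The other option, overriding the values at run time, relies on docker run’s -e flag: `-e NAME=` sets NAME to an empty string inside the container. This is a minimal sketch; run_without_proxy is a hypothetical wrapper, and the DOCKER variable is an assumption added only so the command can be dry-run with `echo`.

```shell
#!/bin/sh
# Hypothetical wrapper: run an image with the proxy variables baked in by ENV
# overridden to empty strings. Set DOCKER=echo to print the command instead.
run_without_proxy() {
  # ${DOCKER:-sudo docker} is left unquoted on purpose so that the default
  # splits into the two words "sudo" and "docker".
  ${DOCKER:-sudo docker} run -e http_proxy= -e https_proxy= "$@"
}
```

For example, `run_without_proxy curl curl -v http://blog.codeship.com` repeats the earlier request without the proxy, even for an image built from the Dockerfile that leaves the proxy set.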

Summary

In this article, we covered how to configure Docker (on Ubuntu) to use a proxy to download container images. We also explored how to configure apt-get within the container to use a proxy and why it is necessary.

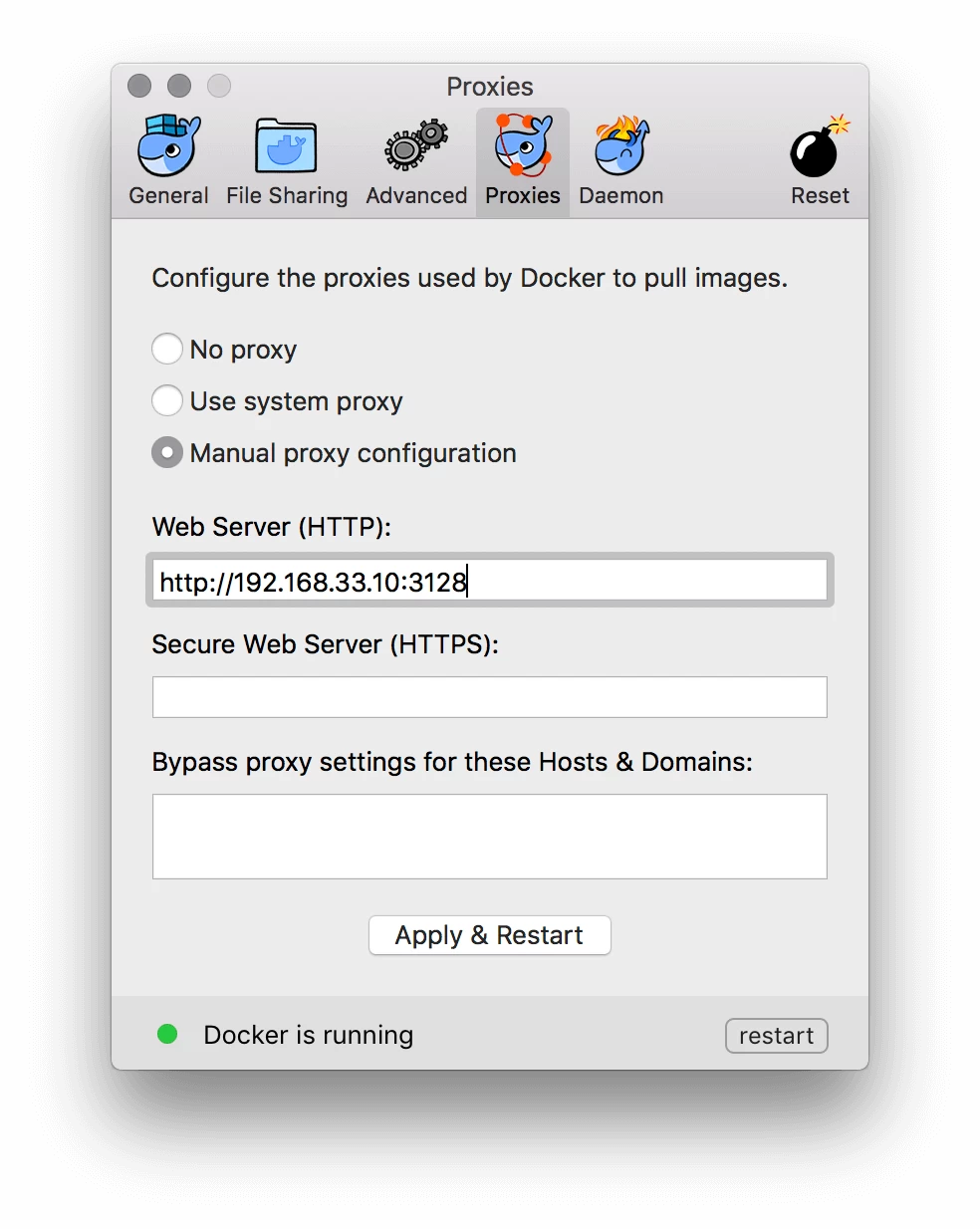

While this is useful for Ubuntu laptops and hosts running Docker, many people use the Docker for Mac & Windows application. Luckily, the proxy configuration for Docker for Mac & Windows is pretty easy as well. In fact, it is part of the Docker preferences configuration.

In the Docker preferences, there is an option for Proxies. If you simply click this option, you can add both an HTTP and HTTPS proxy using the Manual proxy configuration option.

This setting allows you to pull images from docker.io; however, it does not replace configuring the proxy within the container. That step is required regardless of where the container is built.

| Reference: | Using Docker Behind a Proxy from our WCG partner Ben Cane at the Codeship Blog. |